I’ve been thinking about this topic for a few days. I believe it’s a huge issue that spans multiple sectors: infrastructure, server cluster controllers, content delivery networks (CDNs), and even the physical distance between users and AI server farms.

Physical Distance Between AI Infrastructure and Users

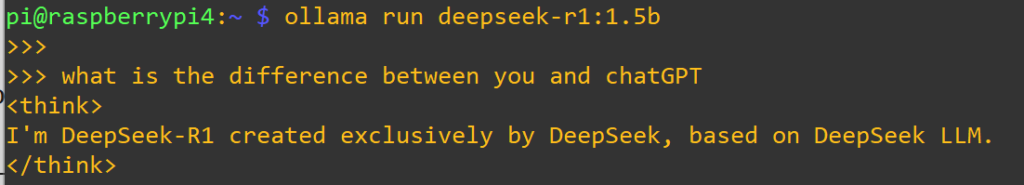

First, consider how we use Large Language Models (#LLM) such as #ChatGPT or #Grok. A user opens a web or mobile application and submits a query. The AI server farm then processes the query and sends a response back to the user.

In this interaction, how far away is the user from the AI infrastructure? And how much latency does this distance create?
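As a rough way to put numbers on that question, here is a minimal sketch of the propagation delay that distance alone adds. It assumes light travels through optical fiber at about c / 1.47 (a typical refractive index for silica fiber), and the `path_stretch` parameter is a hypothetical knob for the fact that real fiber routes are longer than the straight-line distance. It ignores queuing, routing hops, TLS handshakes, and model inference time, so the result is a lower bound rather than a prediction.

```python
# Speed of light in vacuum, in km/s.
C_VACUUM_KM_S = 299_792.458
# Assumed refractive index of silica fiber; light in fiber moves at about c / 1.47.
FIBER_REFRACTIVE_INDEX = 1.47


def one_way_delay_ms(distance_km, path_stretch=1.0):
    """Lower-bound one-way propagation delay over fiber, in milliseconds.

    path_stretch is an illustrative multiplier (>= 1.0) for routes that are
    longer than the straight-line distance between the two endpoints.
    """
    speed_km_s = C_VACUUM_KM_S / FIBER_REFRACTIVE_INDEX
    return distance_km * path_stretch / speed_km_s * 1000.0


def round_trip_ms(distance_km, path_stretch=1.0):
    """Lower-bound round-trip time: the request goes out, the response comes back."""
    return 2 * one_way_delay_ms(distance_km, path_stretch)


if __name__ == "__main__":
    # Illustrative distances only, not measurements of any real service.
    for label, km in [("same metro", 50),
                      ("same continent", 2_000),
                      ("intercontinental", 10_000)]:
        print(f"{label:>16}: {round_trip_ms(km):6.1f} ms round trip (lower bound)")
```

Under these assumptions, a user 10,000 km from the server farm pays roughly 98 ms per round trip before any processing starts, and a single query usually involves several round trips (DNS, TCP, TLS, then the request itself).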

We might think about whether a CDN could help deliver responses (via caching) to users in order to reduce latency. However, replies and generated images are often dynamic content, which typically cannot be cached effectively. Of course, there are techniques like WAN acceleration, byte caching, or session caching that can improve efficiency to some extent.

That said, we all know CDNs still provide important functionality beyond caching, such as DDoS protection and DNS services.

According to one report, the top six AI infrastructure hubs in the world are located in the United States, Canada, Norway and Sweden, Singapore, India, and the United Arab Emirates [https://www.rcrwireless.com/20250501/fundamentals/top-ai-infrastructure]. Users, however, generally do not know where the LLM they are querying is actually running. Determining this would require deeper measurements, such as DNS queries and web interaction analysis. Either way, long-distance traffic between regions remains unavoidable.
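A first, very rough version of such a measurement could look like the sketch below. It resolves a hostname with the standard library and times one TCP handshake per returned address, which takes roughly one network round trip. The hostname `example.com` is a placeholder, not a real AI endpoint, and the helper names are my own. Keep in mind that many services sit behind anycast addresses or a CDN front end, so the address that answers is often a nearby edge node rather than the GPU cluster itself. That is exactly why deeper analysis would be needed.

```python
import socket
import time


def resolve(hostname, port=443):
    """Return the sorted, de-duplicated IP addresses that DNS gives for hostname."""
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def tcp_connect_ms(host, port=443, timeout=3.0):
    """Time one TCP handshake to host:port, in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connection established; close it immediately
    return (time.perf_counter() - start) * 1000.0


if __name__ == "__main__":
    host = "example.com"  # placeholder; substitute the service you want to probe
    for ip in resolve(host):
        try:
            print(f"{ip}: {tcp_connect_ms(ip):.1f} ms")
        except OSError as exc:
            # Some addresses (for example IPv6 on an IPv4-only network) may be unreachable.
            print(f"{ip}: unreachable ({exc})")
```

Running this from several regions and comparing the results gives a hint about where the front end is, but not about where inference actually happens.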

Internet Infrastructure and Edge Computing

If long-distance transmission between regions really is unavoidable, what role can Internet infrastructure and Tier 1 network operators play? Additional network deployment will certainly be required to support the high bandwidth demands of AI usage. However, this is not only a matter of bandwidth: latency is equally important.

In the earlier era of the web and HTTP content delivery, the processing requirements were relatively lightweight. A cache server could be deployed at the “edge” to serve static or semi-dynamic web content for multiple users in a given region. This model works well for traditional CDNs.

For AI, however, the situation is different. While lightweight LLMs might be deployed on edge computing nodes—provided those nodes have sufficient processing capability—large-scale AI infrastructure is far more demanding. Deploying an AI system with thousands of GPUs worldwide is not straightforward. Beyond hardware availability, challenges include massive power consumption and the cooling requirements of such clusters, which vary greatly depending on the region.

It’s an interesting question: how do we bring the scale of AI infrastructure closer to the edge while balancing efficiency, cost, and sustainability?

One CDN provider, Cloudflare, has already taken action in collaboration with several AI companies. An AI Gateway or MCP Gateway could help address some of these technical questions. Let’s take a look at the latest updates:

[https://blog.cloudflare.com/ai-gateway-aug-2025-refresh/] [https://blog.cloudflare.com/how-cloudflare-runs-more-ai-models-on-fewer-gpus/?utm_campaign=cf_blog&utm_source=linkedin&utm_medium=organic_social&utm_content=20250827/] #20250827

AI Infrastructure, Controllers and Network Nodes

Building on the above points, it’s important to note that most AI infrastructure today is already quite mature. The robust technologies developed during the Cloud Computing era, such as VXLAN, Kubernetes, other cluster node controllers, and load balancers, provide a strong foundation for managing large-scale AI workloads.

Major cloud providers like Google, AWS, and Microsoft have already demonstrated the stability and scalability of these architectures, showing how well-established the underlying infrastructure has become.

Managing a stable cluster node controller capable of handling millions of AI requests is truly an art of operations. It requires careful consideration of latency at every level, including the low-level hardware channels.

When a request is processed, the load balancer sends it to the appropriate nodes through the infrastructure’s network devices and routing protocols. The nodes then process the request, consolidate the results, and send the final response back to the user. Each of these steps is interrelated, and optimizing them is crucial for delivering fast, reliable AI responses.
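The flow above can be sketched in a few lines. This is a simplified illustration under my own assumptions, not how any particular provider implements it: the `Node` and `LeastLoadedBalancer` names are hypothetical, the balancer uses a plain least-in-flight policy, and the latency model assumes the chosen nodes work in parallel so that the slowest one gates the response.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    in_flight: int = 0  # requests this node is currently processing


class LeastLoadedBalancer:
    """Hypothetical balancer that prefers the nodes with the fewest in-flight requests."""

    def __init__(self, nodes):
        self.nodes = list(nodes)

    def pick(self, count=1):
        """Choose `count` nodes and mark them as busy with one more request."""
        chosen = sorted(self.nodes, key=lambda n: n.in_flight)[:count]
        for node in chosen:
            node.in_flight += 1
        return chosen

    def release(self, nodes):
        """Mark the request as finished on the given nodes."""
        for node in nodes:
            node.in_flight -= 1


def fan_out_latency_ms(hop_ms, node_ms, merge_ms):
    """Latency of one request split across nodes that work in parallel.

    hop_ms:   one-way network latency between the balancer and a node
    node_ms:  processing time of each chosen node
    merge_ms: time to consolidate the partial results
    The slowest node gates the response, so node times combine with max(), not sum().
    """
    return 2 * hop_ms + max(node_ms) + merge_ms


if __name__ == "__main__":
    balancer = LeastLoadedBalancer([Node("a", 2), Node("b", 0), Node("c", 1)])
    chosen = balancer.pick(count=2)
    print("dispatched to:", [n.name for n in chosen])
    # Illustrative timings in milliseconds, not measurements.
    print("response after:", fan_out_latency_ms(0.5, [40.0, 55.0], 3.0), "ms")
    balancer.release(chosen)
```

The point of the model is that every term matters: shaving the network hop helps little if one slow node dominates, and a fast cluster helps little if the consolidation step is slow.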

Some researchers have started studying how to reduce latency in these processes, or how to determine how low it actually needs to be. This spans multiple layers: operating systems, RAM, GPU channels, network connections, network protocols, control and data planes, fiber channels, and more.

If all distributed nodes could be interconnected with specialized high-speed links, such as PCIe, ASIC boards, Scalable Link Interface (SLI), or CrossFire links, what would that mean for latency? It’s an interesting thought experiment worth exploring. A recent NVIDIA blog post introduced Quantum-X InfiniBand Photonics, a switch with integrated fiber optics that reduces the electrical-to-optical conversion normally performed at the optical transceiver between the switch and the fiber [https://developer.nvidia.com/blog/scaling-ai-factories-with-co-packaged-optics-for-better-power-efficiency/?ncid=so-link-416360&linkId=100000378640319]. I believe that while it may initially be used only in AI infrastructure, one day it could become essential for every Tier 1 network infrastructure.

Open discussion.